Responsible AI

The Responsible AI initiative looks at how organizations define and approach responsible AI practices, policies, and standards. Drawing on global executive surveys and smaller, curated expert panels, the program gathers perspectives from diverse sectors and geographies with the aim of delivering actionable insights on this nascent yet important focus area for leaders across industry.

Extra on this collection

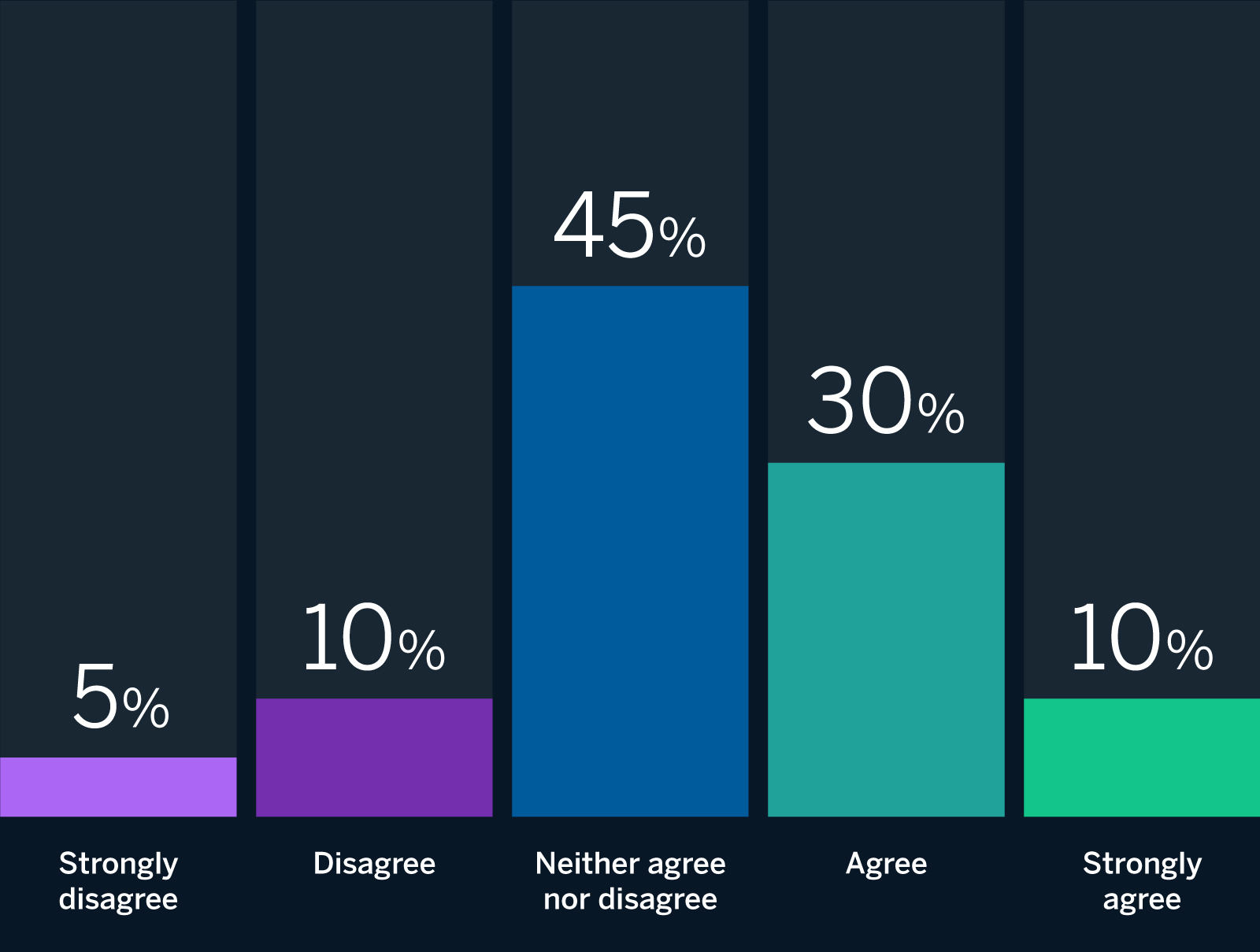

MIT Sloan Management Review and BCG have assembled an international panel of AI experts that includes academics and practitioners to help us gain insights into how responsible artificial intelligence (RAI) is being implemented in organizations worldwide. This month, we asked our expert panelists for reactions to the following provocation: Executives usually think of RAI as a technology issue. The results were wide-ranging, with 40% (8 out of 20) of our panelists either agreeing or strongly agreeing with the statement; 15% (3 out of 20) disagreeing or strongly disagreeing with it; and 45% (9 out of 20) expressing ambivalence, neither agreeing nor disagreeing. While our panelists differ on whether this sentiment is widely held among executives, a sizable fraction argue that it depends on which executives you ask. Our experts also contend that views are changing, with some offering ideas on how to accelerate this change.

In September 2022, we published the results of a research study titled “To Be a Responsible AI Leader, Focus on Being Responsible.” Below, we share insights from our panelists and draw on our own observations and experience working on RAI initiatives to offer recommendations on how to persuade executives that RAI is more than just a technology issue.

The Panelists Respond

Executives usually think of RAI as a technology issue.

While many of our panelists personally believe that RAI is more than just a technology issue, they acknowledge that some executives harbor a narrower perspective.

Source: Responsible AI panel of 20 experts in artificial intelligence strategy.

Responses from the 2022 Global Executive Survey

Fewer than one-third of organizations report that their RAI initiatives are led by technical leaders, such as a CIO or CTO.

Source: MIT SMR survey data excluding respondents from Africa and China, combined with Africa and China supplement data fielded in-country; n=1,202.

Executives’ Varying Views on RAI

Many of our experts are reluctant to generalize when it comes to C-suite perceptions of RAI. For Linda Leopold, head of responsible AI and data at H&M Group, “Executives, as well as subject matter experts, often look at responsible AI through the lens of their own area of expertise (whether it’s data science, human rights, sustainability, or something else), perhaps not seeing the full spectrum of it.” Belona Sonna, a Ph.D. candidate in the Humanising Machine Intelligence program at the Australian National University, agrees that “while those with a technical background think that the issue of RAI is about building an efficient and robust model, those with a social background think that it is more or less a way to have a model that is consistent with societal values.” Ashley Casovan, the Responsible AI Institute’s executive director, similarly contends that “it really depends on the executive, their role, the culture of their organization, their experience with the oversight of other types of technologies, and competing priorities.”

The extent to which an executive views RAI as a technology issue may depend not only on their own background and expertise but also on the nature of their organization’s business and how much it uses AI to achieve outcomes. As Aisha Naseer, research director at Huawei Technologies (UK), explains, “Companies that do not deal with AI in terms of either their business/products (meaning they sell non-AI goods/services) or operations (that is, they have fully manual organizational processes) may not pay any heed or cater to the need to care about RAI, but they still must care about responsible business. Hence, it depends on the nature of their business and the extent to which AI is integrated into their organizational processes.” In sum, the extent to which executives view RAI as a technology issue depends on the individual and organizational context.

An Overemphasis on Technological Solutions

While many of our panelists personally believe that RAI is more than just a technology issue, they acknowledge that some executives still harbor a narrower perspective. For example, Katia Walsh, senior vice president and chief global strategy and AI officer at Levi Strauss & Co., argues that “responsible AI should be part of the values of the entire organization, just as important as other key pillars, such as sustainability; diversity, equity, and inclusion; and contributions to making a positive difference in society and the world. In summary, responsible AI should be a core issue for a company, not relegated to technology only.”

But David R. Hardoon, chief data and AI officer at UnionBank of the Philippines, observes that the reality is often different, noting, “The dominant approach undertaken by many organizations toward establishing RAI is a technological one, such as the implementation of platforms and solutions for the development of RAI.” Our global survey tells a similar story, with 31% of organizations reporting that their RAI initiatives are led by technical leaders, such as a CIO or CTO.

Several of our panelists contend that executives can place too much emphasis on technology, believing that technology will solve all of their RAI-related concerns. As Casovan puts it, “Some executives see RAI as just a technology issue that can be resolved with statistical tests or good-quality data.” Nitzan Mekel-Bobrov, eBay’s chief AI officer, shares similar concerns, explaining that, “Executives usually understand that the use of AI has implications beyond technology, particularly regarding legal, risk, and compliance considerations, [but] RAI as a solution framework for addressing these considerations is usually seen as purely a technology issue.” He adds, “There is a pervasive misconception that technology can solve all the concerns about the potential misuse of AI.”

Our research suggests that RAI Leaders (organizations making a philosophical and material commitment to RAI) do not believe that technology can fully address the misuse of AI. In fact, our global survey found that RAI Leaders involve 56% more roles in their RAI initiatives than Non-Leaders (4.6 for Leaders versus 2.9 for Non-Leaders). Leaders recognize the importance of including a broad set of stakeholders beyond individuals in technical roles.

Attitudes Toward RAI Are Changing

Even if some executives still view RAI as primarily a technology issue, our panelists believe that attitudes toward RAI are evolving because of growing awareness and appreciation of RAI-related concerns. As Naseer explains, “Although most executives consider RAI a technology issue, due to recent efforts around generating awareness on this topic, the trend is now changing.” Similarly, Francesca Rossi, IBM’s AI Ethics global leader, observes, “While this may have been true until a few years ago, now most executives understand that RAI means addressing sociotechnological issues that require sociotechnological solutions.” Finally, Simon Chesterman, senior director of AI governance at AI Singapore, argues that “like corporate social responsibility, sustainability, and respect for privacy, RAI is on track to move from being something for IT departments or communications to worry about to being a bottom-line consideration.” In other words, RAI is evolving from a “nice to have” to a “must have.”

For some panelists, these changing attitudes toward RAI correspond to a shift in industry’s views on AI itself. As Oarabile Mudongo, a researcher at the Center for AI and Digital Policy, observes, “C-suite attitudes about AI and its application are changing.” Likewise, Slawek Kierner, senior vice president of data, platforms, and machine learning at Intuitive, posits that “recent geopolitical events increased the sensitivity of executives toward diversity and ethics, while the successful industry transformations driven by AI have made it a strategic topic. RAI is at the intersection of both and hence makes it to the boardroom agenda.”

For Vipin Gopal, chief data and analytics officer at Eli Lilly, this evolution depends on levels of AI maturity: “There is increasing recognition that RAI is a broader business issue rather than a pure tech issue [but organizations that are] in the earlier stages of AI maturation have yet to make this journey.” Nevertheless, Gopal believes that “it is only a matter of time before the vast majority of organizations consider RAI to be a business topic and manage it as such.”

Ultimately, a broader view of RAI may require cultural or organizational transformation. Paula Goldman, chief ethical and humane use officer at Salesforce, argues that “tech ethics is as much about changing culture as it is about technology,” adding that “responsible AI can be achieved only once it is owned by everyone in the organization.” Mudongo agrees that “realizing the full potential of RAI demands a transformation in organizational thinking.”

Uniting diverse viewpoints can help. Richard Benjamins, chief AI and data strategist at Telefónica, asserts that executive-level leaders should ensure that technical AI teams and more socially oriented ESG (environmental, social, and governance) teams “are connected and orchestrate a close collaboration to accelerate the implementation of responsible AI.” Similarly, Casovan suggests that “the ideal scenario is to have shared responsibility through a comprehensive governance board representing both the business, technologists, policy, legal, and other stakeholders.” In our survey, we found that Leaders are nearly three times as likely as Non-Leaders (28% versus 10%) to have an RAI committee or board.

Recommendations

For organizations seeking to ensure that their C-suite views RAI as more than just a technology issue, we recommend the following:

- Bring diverse voices together. Executives have varying views of RAI, often based on their own backgrounds and expertise. It is important to embrace genuine multi- and interdisciplinarity among those in charge of designing, implementing, and overseeing RAI programs.

- Embrace nontechnical solutions. Executives should understand that mature RAI requires going beyond technical solutions to the challenges posed by technologies like AI. They should embrace both technical and nontechnical solutions, including a wide array of policies and structural changes, as part of their RAI program.

- Focus on culture. Ultimately, as Mekel-Bobrov explains, going beyond a narrow, technological view of RAI requires a “corporate culture that embeds RAI practices into the normal way of doing business.” Cultivate a culture of responsibility within your organization.